Tackling AI’s Reasoning Paralysis

Neuro-Symbolic Heuristics and SEAL’s Self-Adaptation Tackle ARC Challenges

Summary:

Key to unlocking true intelligence is overcoming "reasoning paralysis, "where AI struggles with complex, multi-step tasks. This article explores two promising approaches that may help bridge this gap:

Neuro-symbolic computing, which blends neural pattern recognition with heuristic-driven symbolic logic;

MIT’s Self-Adapting Language Model (SEAL), which generates its own training data to adapt rapidly to new tasks.

Both were tested using Chollet’s Abstraction and Reasoning Corpus (ARC), a benchmark designed to evaluate how well AI can generalize and reason from limited examples. Neuro-symbolic methods achieve 20–50% accuracy on ARC, while SEAL reaches 72.5% on a curated subset but ~30–40% on broader tasks. Despite challenges like high computational demands and “catastrophic forgetting” remain, these innovations represent meaningful steps toward more adaptable, robust, and potentially general-purpose AI systems.

1. Introduction: The Challenge of AI Reasoning

Recent advances in Artificial Intelligence (AI), driven by Large Language Models (LLMs) [1] like ChatGPT and DeepSeek, and emerging Large Reasoning Models (LRMs) [2], designed to solve complex, multi-step tasks, have led to impressive capabilities in natural language understanding and generation. However, even state-of-the-art systems often falter when faced with abstract problems that require structured reasoning. This phenomenon, which I've called "reasoning paralysis” [3], refers to AI’s inability to generalize from limited examples or adapt dynamically to novel problems. This limitation is also highlighted in recent Apple research (Shojaee et al., 2025) [4].

Building on my last post about AI's "reasoning paralysis," this post examines two distinct strategies for addressing this challenge:

Human-inspired heuristic reasoning through neuro-symbolic integration [5], which combines neural pattern-finding with rule-based symbolic logic. Although many neuro-symbolic approaches use heuristics, not all heuristic methods are strictly neuro-symbolic.

MIT’s SEAL framework [6], a self-editing language model capable of generating its own training data and adapting rapidly to new tasks.

2. The ARC Benchmark: A Real Test of Reasoning

François Chollet’s Abstraction and Reasoning Corpus (ARC) [7] is a widely recognized benchmark for testing abstract reasoning in AI. ARC consists of about a thousand visual grid puzzles, each providing just a handful of input-output examples. The real challenge for AI is to figure out the underlying rules and apply them to new, unseen puzzles, much like how humans can learn a pattern from just a few clues and then generalize it. Unlike memorization-based tasks, ARC tests true reasoning. Other benchmarks, like the Boswell Test [8], similarly probe abstract reasoning but emphasize diverse problem types, broadening the evaluation of AI generalization. Humans score around 80% accuracy on average, while current AI systems typically achieve between 20–40%, depending on the method used and the specific subset of ARC tasks evaluated (see ARC Prize competition [9]).

3. Heuristic Reasoning: Borrowing from Human Intuition

Humans solve problems using heuristics [10], or simply intuitive shortcuts derived from experience and rules of thumb. By embedding similar strategies into AI, we can enable more structured, goal-directed reasoning beyond brute-force computation. This approach is especially valuable in areas like visual puzzles or advanced mathematics, where pure pattern matching fails. ARC is a prime example of demanding the inference of abstract transformation rules, such as rotation, mirroring, or color mapping [7], from just a few input-output pairs.

3.1 Neuro-Symbolic Integration: Where Patterns Meet Logic

Neuro-symbolic methods combine the best of both worlds: the powerful pattern recognition of deep learning with the interpretability and logical consistency of symbolic reasoning; namely, using explicit rules, like a math formula or a set of instructions (of if-then statements for grid transformations) (Wang et al. 2022 [5], Colelough et al. 2025 [11]). These hybrid architectures help AI generalize, explain its decisions, and reason more effectively. However, not all neuro-symbolic methods use heuristics, and not all heuristic-driven systems are strictly neuro-symbolic. In this post, I focus specifically on those neuro-symbolic methods that leverage intuitive rules to guide symbolic reasoning for better generalization and interpretability, especially in challenging tasks like ARC.

In recent work, Bober-Irizar et al. (2024) [12] used a neuro-symbolic ensemble of three algorithms on ARC, achieving 37% accuracy on the ARC-Hard evaluation set, surpassing previous baselines (20–30%) but still trailing human performance (~80%). Batorski et al. (2025) [13]) further improved results with neuro-symbolic program synthesis, showing how explicit rule-based structures can guide learning in abstract domains and even secure a competitive ranking on the ARC train set, as tracked by the ARC Prize competition [9].

3.2 Key Advantages of Heuristic Neuro-Symbolic Methods

While neuro-symbolic approaches are diverse, those that leverage heuristics often share several key advantages:

Interpretability : Clear decision paths allow for debugging and validation.

Data Efficiency : Require fewer examples due to built-in logic.

Robustness : Less prone to overfitting or hallucination.

These strengths make neuro-symbolic methods especially well-suited for abstract grid-based reasoning tasks like those found in ARC.

4. SEAL: MIT’s Self-Editing Language Model

A major leap forward in adaptive AI comes from MIT’s SEAL (Self-Adapting Language Model) framework (Zweiger et al., 2025) [6], which introduces a new form of self-directed learning:

Key Features of SEAL

Self-Editing : Generates its own training examples and instructions, significantly reducing reliance on external supervision.

Reinforcement Learning : Improves through trial and error, continuously refining itself.

Few-Shot Adaptation : Learns effectively from small amounts of data, making it ideal for niche or evolving tasks.

SEAL achieved a notable 72.5% accuracy on a specially selected group (or a curated subset) of ARC tasks, far outperforming prompt-based learning (5-10%) and basic self-edits (~20%). However, its performance on the full ARC dataset is lower, typically 30–40%. Although this is a significant step forward in autonomous learning and generalization, it’s important to note that these results are based on a targeted evaluation set; whether SEAL can generalize across the entire ARC remains an open question.

4.1 SEAL and the Future: Learning Transfer and Generalization

SEAL aligns with Ray Kurzweil’s “learning transfer” concept [14], where AI applies knowledge across domains, a cornerstone of Artificial General Intelligence (AGI) [15]. This mirrors breakthroughs in systems like AlphaGo’s evolving into MuZero and AlphaDev [15], where learned strategies are generalized across games and problem types. Both SEAL’s self-adaptive learning and neuro-symbolic reasoning are distinct yet crucial advancements, each pushing us closer to AI systems that are flexible, interpretable, and capable of reasoning, adapting, and explaining their decisions.

4.2 Challenges: Efficiency and Forgetting

Despite its promise, SEAL faces notable challenges:

Computational Demands : Self-editing cycles require substantial compute resources, limiting scalability.

“Catastrophic Forgetting” : As the model adapts to new tasks, it risks overwriting previously acquired knowledge, impacting long-term reliability. Though this is a well-known challenge in continual learning, it is especially relevant for highly adaptive models like SEAL.

These issues highlight the need for further research into memory retention mechanisms and efficient reinforcement learning pipelines.

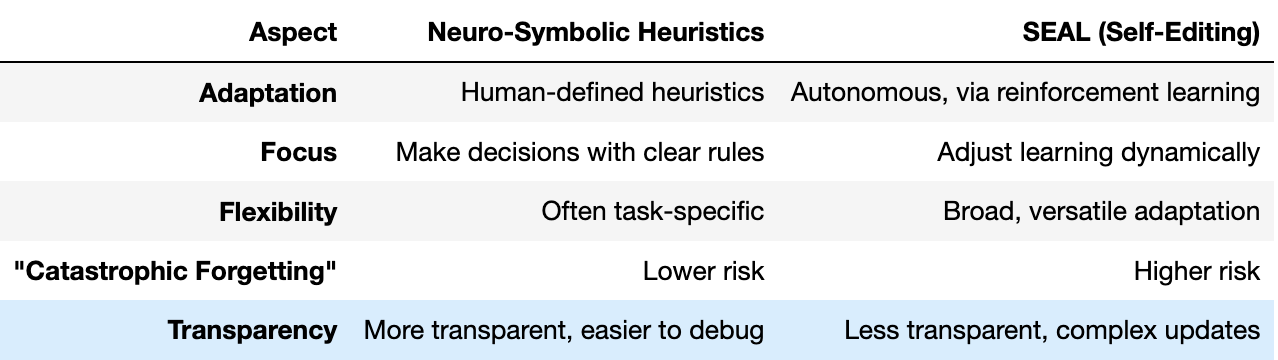

5. Comparing Neuro-Symbolic Heuristics and SEAL

Both approaches advance AI reasoning, with distinct strengths:

Neuro-symbolic methods excel in interpretability and data efficiency, while SEAL offers superior adaptability through self-editing. Understanding the individual strengths and limitations of each approach is key to maximizing their potential.

6. Conclusions:

MIT’s SEAL framework, with its ability to self-edit and adapt to new tasks (achieving 72.5% ARC performance on a curated subset and 30-40% on broader tasks), represents a pivotal step in overcoming “reasoning paralysis.” Meanwhile, neuro-symbolic methods, offering interpretability and rule-guided reasoning (~20–40% on broader ARC evaluations), provide a complementary path. These innovations, though distinct, collectively push AI toward greater flexibility, generalization, and autonomy. Despite challenges like computational costs and “catastrophic forgetting,” continued exploration and refinement of these methods will be crucial for unlocking AI’s full potential and bringing us closer to AGI [14].

Hashtags:

#AIReasoning #NeuroSymbolic #ARC #TuringTest #BoswellTest #AIResearch #AGI #MachineLearning

Acknowledgment:

I first heard about the SEAL paper in an episode of Real Coffee with Scott Adams [17]. Thanks to AI chatbots (Gemini 2.5 flash, Grok3, Perplexity AI, and Qwen alphabetically) in refining my draft through rigorous feedback on technical accuracy and aligning with my AI reasoning evaluation methodology.

References:

[1] Zhao, W. et al., (2023). A Survey of Large Language Models, arxiv.org; May 2023; see also Large Language Models, en.wikipedia.org; Jun 2025.

[2] Xu, F. et al. (2025). Towards Large Reasoning Models: A Survey of Reinforced Reasoning with Large Language Models, arxiv.org; Jan 2025.

[3] Luh, P. (2025). AI’s Reasoning Frontiers: Empirical Deep Dives, Heuristic Gaps, and the Path Forward, substack.com; June 2025.

[4] Shojaee, P. et al. (2025). The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity, machinelearning.apple.com; June 2025.

[5] Wang, W. et al. (2022). Towards Data-And Knowledge-Driven AI: a Survey on Neuro-Symbolic Computing, arxiv.org; latest revision Oct 2024.

[6] Zweiger, A. et al. (2025). Self Adapting Language Models, arxiv.org; June 2025.

[7] Chollet, F. (2019). On the Measure of Intelligence, arxiv.org; Nov 2019.

[8] Luh, P. (2025). Advancing Artificial Intelligence in the Post-Turing Era: Boswell Test: Beyond the Turing Benchmark (from IJAIA; Vol 16, No 2; March 2025), substack.com; April 2025; see also IJAIA Vol16, No 2, aircconline.com, March 2025.

[9] ARC Prize, arcprize.org.

[10] Gigerenzer, G et al. (1999). Simple Heuristics That Make Us Smart. Oxford University Press; see also Meiring, S. P., (1980). Heuristics for Problem Solvers, mathstunners.org.

[11] Colelough, B. et al. (2025). Neuro-Symbolic AI in 2024: A Systematic Review, arxiv.org; April 2025.

[12] Bober-Irizar et al. (2024). Neural networks for abstraction and reasoning: Towards broad generalization in machines, arxiv.org; Feb 2024.

[13] Batorski, J. et al. (2025). Neurosymbolic Program Synthesis for ARC, arxiv.org; Jan 2025.

[14] Kurzweil, R. (2024). The Singularity Is Nearer: When We Merge with AI, penguinrandomhouse.com; Viking, June 2024; see also The Singularity Is Nearer, en.wikipedia.org; May 2025.

[15] Leffer, L., (2024). In the Race to Artificial General Intelligence, Where’s the Finish Line?, Scientific American, scientificamerican.com; June 2024.

[16] Google DeepMind, (2023). MuZero, AlphaZero, and AlphaDev: Optimizing computer systems, deepmind.google; June 2023; see also MuZero, en.wikipedia.org; June 2025.

[17] Adams, S. (2025) Real Coffee with Scott Adams (2874), podcasts.apple.com; see also Coffee with Scott Adams (2874), youtube.com, 1:04:50, June 2025.